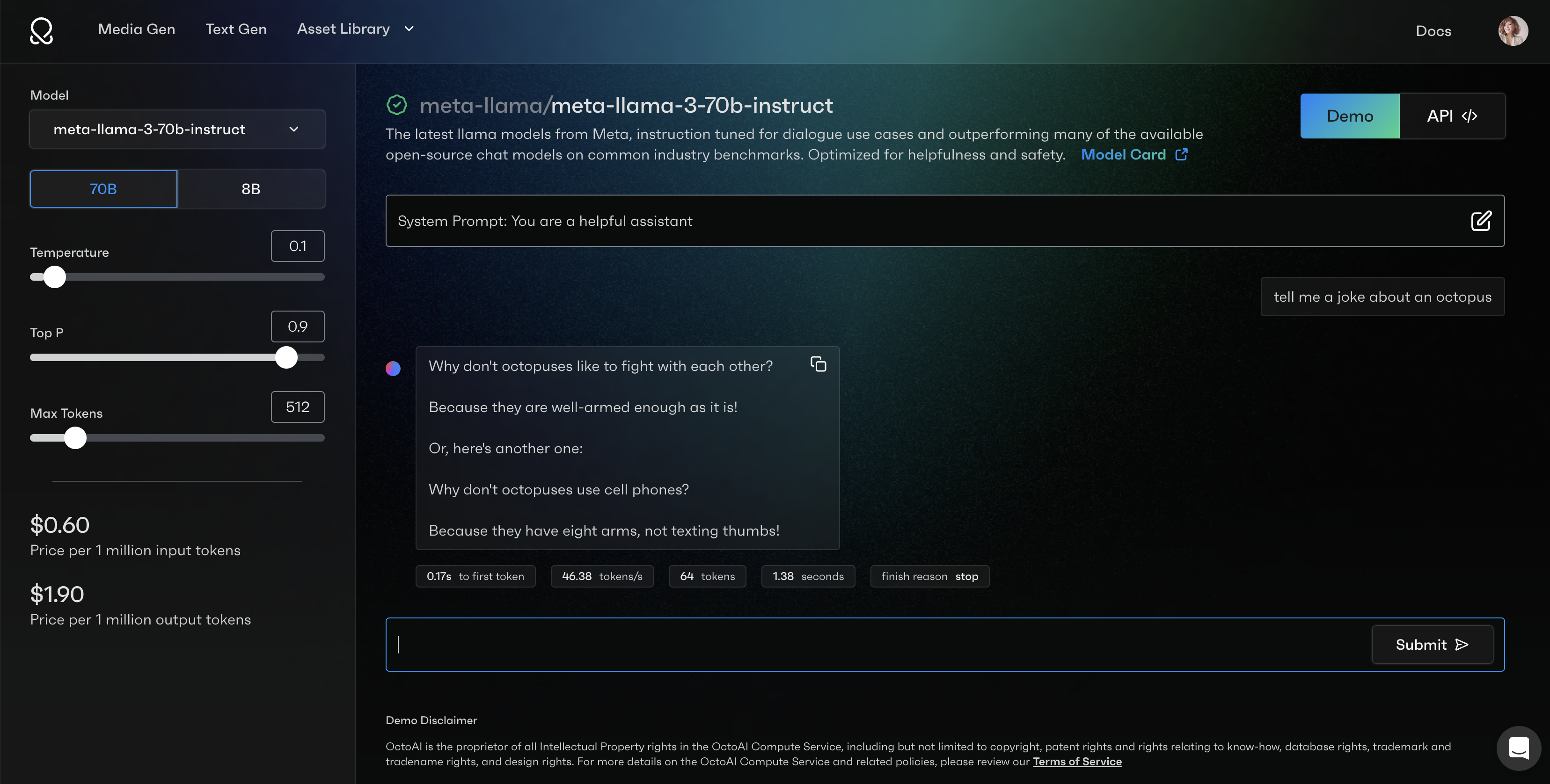

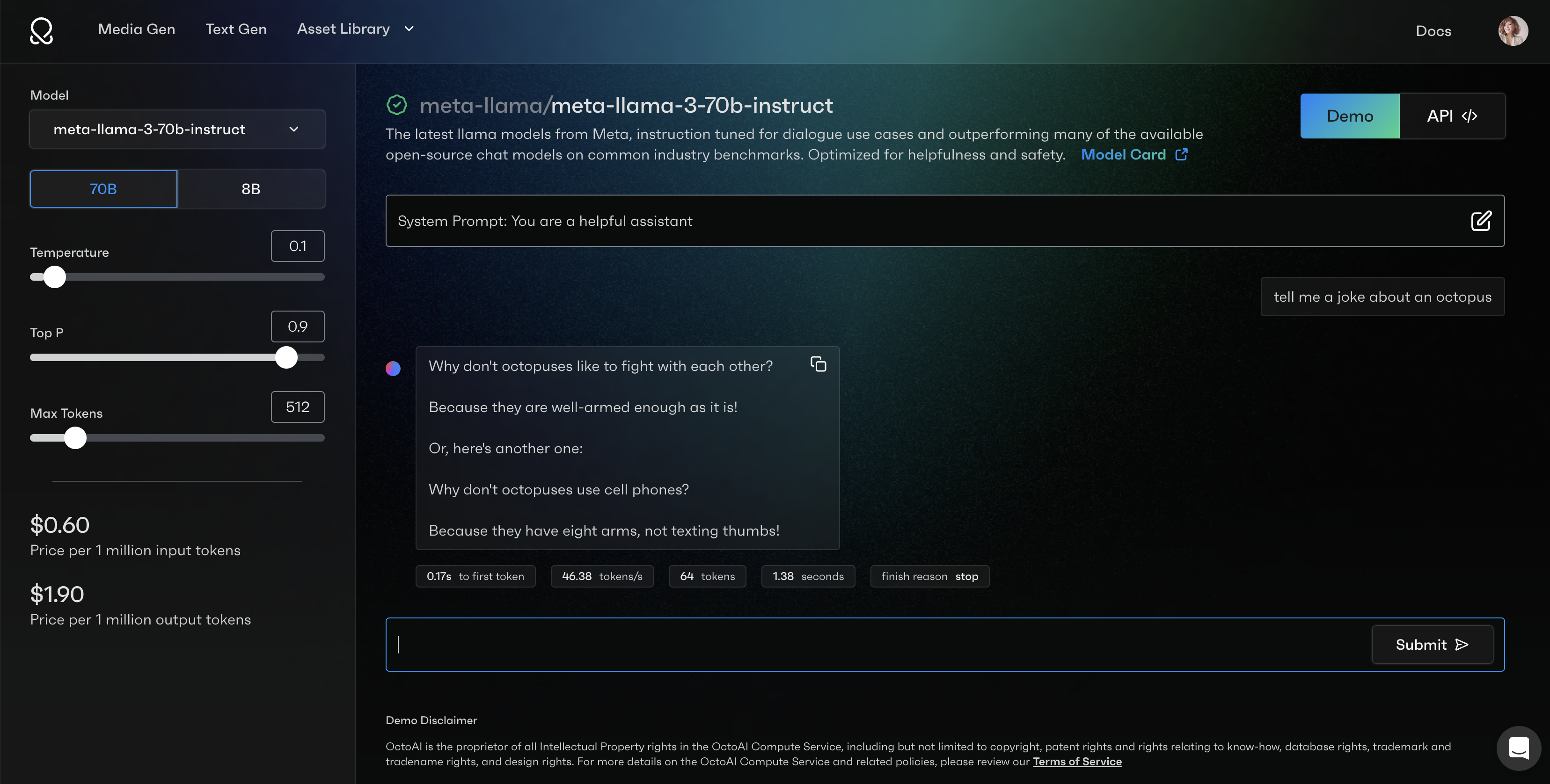

Try Meta Llama 3 via the OctoAI API

Meta unveiled two exciting additions to its Llama series: Llama 3 8b and Llama 3 70b. These new models double the context window of their predecessors (with more improvements to come) and enhance text processing efficiency. One of the most interesting outcomes of the release is that the new Llama 3 8b matches the performance of Llama 2 70b, highlighting the massive leaps large language models continue to make. Both models are available via the OctoAI API under experimental tags. Meta’s Llama team included internal benchmark results, and we anticipate additional community benchmarks in the coming weeks.

You can run inference against Llama 3 8b Instruct or Llama 3 70b Instruct on OctoAI using the /chat/completions REST API by creating a free trial or using your existing account. If you have a fine-tuned version that you would like to deploy, please contact us. We are monitoring the community fine-tunes that are already being released and will be on the lookout for the best new offerings to host on OctoAI.

Prompting with chat completions API

After generating an API token, you can try out our hosted Llama 3 models with your favorite cURL invocation method, such as Postman or a terminal command. The examples below read the token from the OCTOAI_TOKEN environment variable. As with all new models, OctoAI’s Llama 3 models are tagged as experimental, and we recommend trying them out before integrating them into a production environment.

    # Llama 3 8b Instruct
    curl -X POST "https://text.octoai.run/v1/chat/completions" \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer $OCTOAI_TOKEN" \
      --data-raw '{
        "messages": [
          {
            "role": "system",
            "content": "You are a helpful assistant. Keep your responses limited to one short paragraph if possible."
          },
          {
            "role": "user",
            "content": "Hello world"
          }
        ],
        "model": "meta-llama-3-8b-instruct",
        "max_tokens": 128,
        "presence_penalty": 0,
        "temperature": 0.1,
        "top_p": 0.9
      }'

    # Llama 3 70b Instruct
    curl -X POST "https://text.octoai.run/v1/chat/completions" \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer $OCTOAI_TOKEN" \
      --data-raw '{
        "messages": [
          {
            "role": "system",
            "content": "You are a helpful assistant. Keep your responses limited to one short paragraph if possible."
          },
          {
            "role": "user",
            "content": "Hello world"
          }
        ],
        "model": "meta-llama-3-70b-instruct",
        "max_tokens": 128,
        "presence_penalty": 0,
        "temperature": 0.1,
        "top_p": 0.9
      }'
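
If you would rather call the endpoint from a script than from cURL, the same request can be sketched in Python with only the standard library. This is a minimal illustration, not an official client: the helper names `build_request` and `chat` are hypothetical, the URL, headers, model names, and parameters are copied from the cURL examples above, and the response is returned as raw JSON because this post does not describe its shape.

```python
import json
import os
import urllib.request

# Endpoint taken from the cURL examples above.
API_URL = "https://text.octoai.run/v1/chat/completions"

SYSTEM_PROMPT = (
    "You are a helpful assistant. Keep your responses limited to "
    "one short paragraph if possible."
)


def build_request(model, user_message, token, max_tokens=128):
    """Build (but do not send) a chat completions request.

    Hypothetical helper: it mirrors the JSON body of the cURL examples.
    """
    payload = {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_message},
        ],
        "model": model,
        "max_tokens": max_tokens,
        "presence_penalty": 0,
        "temperature": 0.1,
        "top_p": 0.9,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


def chat(model, user_message):
    """Send the request and return the decoded JSON response as-is."""
    token = os.environ["OCTOAI_TOKEN"]  # same variable the cURL examples use
    request = build_request(model, user_message, token)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


# Example usage (requires network access and a valid OCTOAI_TOKEN):
# for model in ("meta-llama-3-8b-instruct", "meta-llama-3-70b-instruct"):
#     print(model, json.dumps(chat(model, "Hello world"), indent=2))
```

Keeping request construction separate from sending makes it easy to inspect the payload, or to switch between the 8b and 70b models, without making a network call.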

Expanded trust & safety tooling from Meta

Meta has also released new trust and safety tools: Llama Guard 2, Code Shield, and CyberSec Eval 2. OctoAI offers Llama Guard for moderating content in human-AI interactions, and we plan to upgrade the Llama Guard endpoint in the near future. If you are interested in upgrading to Llama Guard 2, please contact us.

Stay connected on the latest open source text gen models

Join us on Discord or connect with us on LinkedIn, X (formerly Twitter), and YouTube.