Get the Most from Your Data with OctoStack and Snowflake

Data is your company’s true moat. And extracting value from that data to drive real business outcomes is now getting easier, thanks to generative AI.

When most people think about Large Language Models (LLMs), the first thing that springs to mind is summarization, or ChatGPT-style content creation. But LLMs hold incredible potential to make enterprise data more meaningful, useful, and actionable.

The biggest challenge enterprises face, however, is how to feed their precious data to those LLMs. Stringent data handling regulations prevent most enterprises from using the ubiquitous SaaS-based model APIs provided by companies like OpenAI.

OctoStack from OctoAI allows enterprises to deploy LLMs within their environment so their data can be accessed privately and securely. I recently built a demo to show you how to use OctoStack to augment your own datasets using a technique known as Retrieval Augmented Generation, or RAG. I set up RAG using OctoStack in Snowflake’s Snowpark — a popular tool to develop data pipelines, machine learning models, and apps with enterprise data.

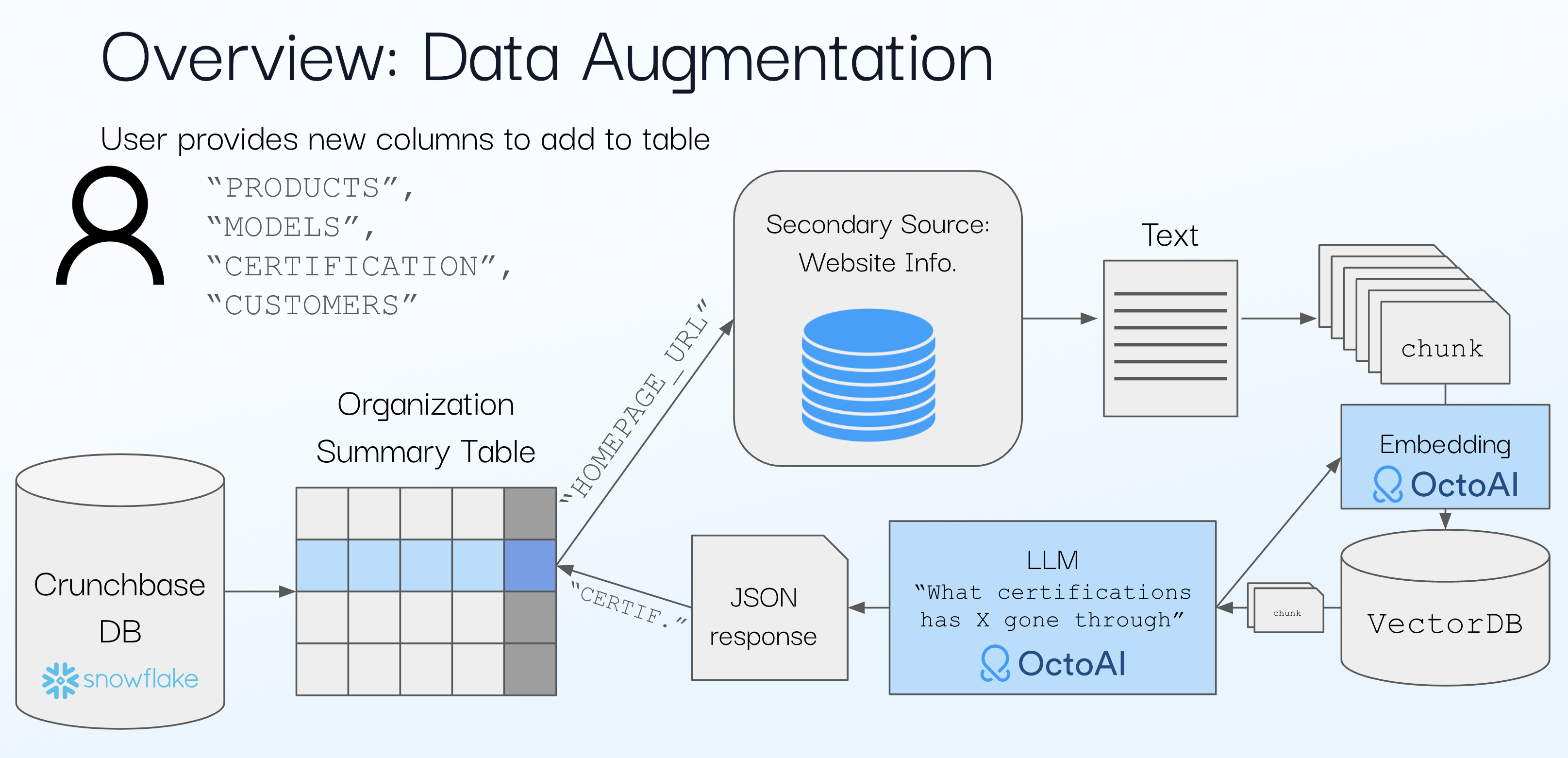

This demo is a great example of how you can augment existing datasets with new context quickly and efficiently, using LLMs. The basic workflow looks like this, but check out the video for a full deep-dive.

Data augmentation with Snowflake and OctoAI

RAG on OctoStack

We used Crunchbase as our primary dataset, which has information on more than 3 million companies. With this basic dataset, we can answer questions like: “How many AI companies are based in Seattle?”

Using this RAG-based architecture, we’ll augment that data with information scraped from company websites. Adding this secondary data source with RAG lets us obtain additional insights on the primary dataset. Now, we can ask more complex questions like: “How many Seattle-based AI startups are SOC2 certified?”
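To make the retrieve-then-ask pattern concrete, here is a minimal sketch of a single RAG query for one company. It is not the code from the demo: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for a vector database, and the company name and scraped snippets are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real pipeline would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(company: str, question: str, context: list[str]) -> str:
    """Assemble the prompt that would be sent to the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        f"Context scraped from the website of {company}:\n{joined}\n\n"
        f"Question: {question}\n"
        "Answer using only the context above."
    )


# Hypothetical scraped snippets for one made-up company.
chunks = [
    "Acme AI builds forecasting tools from its Seattle headquarters.",
    "Security: Acme AI completed a SOC2 Type II audit.",
    "Careers: we are hiring machine learning engineers.",
]
question = "Is the company SOC2 certified?"
top = retrieve(question, chunks, k=1)
prompt = build_prompt("Acme AI", question, top)
print(prompt)
```

In the full pipeline, a prompt like this would be built and sent to the LLM once per company row.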

Then, we run RAG queries on each company entry, resulting in about 3M LLM calls per added column; the total grows with the number of columns we add. Assuming 256 tokens per LLM response, that is roughly 768M generated tokens, close to 1B, for each column we add to our table.
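The arithmetic behind that estimate is easy to check. The sketch below assumes one LLM call per row per new column and a fixed response length; the function name is ours, not part of any OctoStack or Snowflake API.

```python
def estimated_generated_tokens(
    num_rows: int, num_new_columns: int, tokens_per_response: int = 256
) -> int:
    """Estimate output tokens, assuming one LLM call per row per new column."""
    return num_rows * num_new_columns * tokens_per_response


per_column = estimated_generated_tokens(3_000_000, 1)
three_columns = estimated_generated_tokens(3_000_000, 3)
print(f"{per_column:,} tokens for one added column")
print(f"{three_columns:,} tokens for three added columns")
```

This counts generated tokens only; prompt tokens, which include the retrieved context, come on top of it.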

To augment our table quickly, the LLM (Mixtral 8x22B) has to process that data fast. An optimized serving stack like OctoStack can cut the time it takes to augment the dataset with an open source LLM by up to 4X compared with state-of-the-art DIY implementations.

It’s also important that the LLMs generate reliably accurate answers that are properly formatted for consumption. There are two ways OctoStack helps with this. First, we serve leading open-source LLMs like Llama 3, Mistral, and Mixtral, which rival proprietary models in quality benchmarks.

Second, we offer guaranteed JSON mode formatting to make LLM responses easily processable. In the data augmentation video, we use JSON mode to format the LLM response properly and store it into the table.
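As an illustration of why structured output matters here, the sketch below parses a JSON response and checks it against the fields we want to store before writing a row. The field names and the sample response are hypothetical, and no OctoStack call is shown; the string simply stands in for what a JSON-mode response might look like.

```python
import json

# Hypothetical schema for the new columns we want to add to the table.
EXPECTED_FIELDS = {"soc2_certified": bool, "evidence": str}


def parse_augmentation(raw: str) -> dict:
    """Parse an LLM response and keep only the expected, correctly typed fields."""
    data = json.loads(raw)  # raises ValueError (JSONDecodeError) on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    row = {}
    for name, expected_type in EXPECTED_FIELDS.items():
        value = data.get(name)
        if not isinstance(value, expected_type):
            raise ValueError(f"field {name!r} is missing or has the wrong type")
        row[name] = value
    return row


# Stand-in for a JSON-mode LLM response (made up for this example).
raw_response = '{"soc2_certified": true, "evidence": "SOC2 Type II audit completed"}'
row = parse_augmentation(raw_response)
print(row)
```

Validating each response before the insert keeps a single malformed answer from corrupting a column across millions of rows.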

Why OctoStack instead of a SaaS API?

In this demo, I used publicly available data, but you can augment and enrich data with any enterprise data source: documents, CRM data, call logs, emails, and really any use case where data privacy is paramount. With OctoStack, you can run the entire RAG architecture (the embedding model, LLM, and vector database) within your own private environment.

By bringing the compute processes closer to where the data resides, OctoStack mitigates the privacy and compliance risks related to data transfer and storage. This proximity not only enhances security but also improves the responsiveness of AI applications. Enterprises can execute data-intensive operations like analytics and machine learning without the latency that often comes with cloud-based data processing.

In conclusion, OctoStack is not just another tool; it is a strategic asset for any data-driven company looking to leverage the power of generative AI while ensuring data remains secure within their control. This approach doesn’t just comply with privacy requirements—it embraces them as a feature, turning potential limitations into strengths that drive business forward. As we continue to enhance OctoStack, our commitment remains clear: to deliver an AI integration solution that is secure, efficient, and profoundly transformative.

You can book a one-on-one demo of OctoStack with our Engineering Team to learn more.
